After taking a little hiatus from writing, I have a backlog of things to catch up on for my blog. The first is a scenario I ran into recently while helping clients; it has to do with the differences in performance and memory usage between X++ and .NET.

Some Background Information

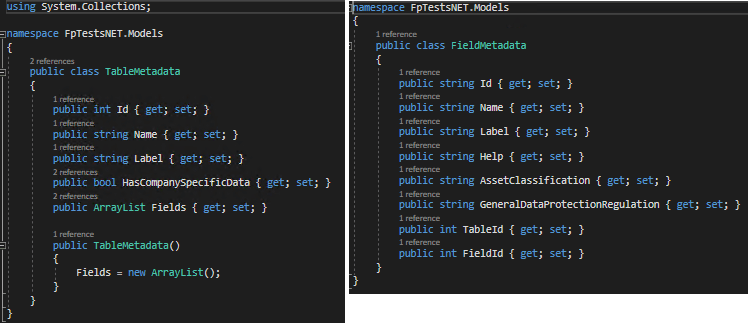

To set up this scenario, within the Fastpath application (an external SAAS-based .NET application hosted in Azure) there is a case where we need to get all table and table field object metadata from the system and return it to our Fastpath solution. This process generates approximately 11,400 table objects and 133,900 table field objects in a default environment.The objects themselves looked like this:

I initially wrote the method to perform this task over 4 years ago (when the product was called AX7). Recently we had customers running into an issue where this piece of code was randomly timing out and would throw the dreaded ‘Unknown Error’. It wasn’t happening for all customers, and even for affected customers it wouldn’t happen every time, but it happened often enough that we had to come up with a different solution.

Original Solution

My original solution used a Dictionary object to loop through all tables in the system, then used DictTable and DictField objects to loop through the fields on each table.

    public ArrayList GetTableMetadata()
    {
        ArrayList tableMetadataList = new ArrayList();
        Dictionary dict = new Dictionary();
        TableId tableId = dict.tableNext(0);

        // Walk every table the dictionary knows about.
        while (tableId)
        {
            // Only tables that exist in SQL are reported.
            if (dict.tableSql(tableId))
            {
                DictTable table = dict.tableObject(tableId);

                // New objects per table, so list entries do not share one instance or one field list.
                FpTestsNET.Models.TableMetadata tableMetadata = new FpTestsNET.Models.TableMetadata();
                ArrayList fieldMetadataList = new ArrayList();

                tableMetadata.Id = tableId;
                tableMetadata.Name = table.name();
                tableMetadata.Label = table.label();

                FieldId fieldId = table.fieldNext(0);
                while (fieldId)
                {
                    DictField field = table.fieldObject(fieldId);

                    // Skip system fields and fields that are not stored in SQL.
                    if (field.isSql() && !field.isSystem())
                    {
                        FpTestsNET.Models.FieldMetadata fieldMetadata = new FpTestsNET.Models.FieldMetadata();
                        fieldMetadata.TableId = field.tableid();
                        fieldMetadata.FieldId = field.id();
                        fieldMetadata.Name = field.name();
                        fieldMetadata.Label = field.label();
                        fieldMetadata.Help = field.help();
                        fieldMetadataList.add(fieldMetadata);
                    }

                    fieldId = table.fieldNext(fieldId);
                }

                tableMetadata.Fields = fieldMetadataList;
                tableMetadataList.add(tableMetadata);
            }

            tableId = dict.tableNext(tableId);
        }

        return tableMetadataList;
    }

Second Solution

I then found a post by Martin Drab describing a new ‘metadata support’ API that has some internal methods to handle the heavy lifting on some of these types of metadata requests. So I was able to change the method to:

    public ArrayList GetTableMetadataNew()
    {
        ArrayList tableMetadataList = new ArrayList();
        Dictionary dict = new Dictionary();
        TableId tableId;
        DictTable dt;
        DictField df;

        var tables = Microsoft.Dynamics.Ax.Xpp.MetadataSupport::GetAllTables();
        var tableEnum = tables.GetEnumerator();

        while (tableEnum.MoveNext())
        {
            FpTestsNET.Models.TableMetadata tableMetadata = new FpTestsNET.Models.TableMetadata();
            // A new field list per table, so tables do not share one growing list.
            ArrayList fieldMetadataList = new ArrayList();
            Microsoft.Dynamics.AX.Metadata.MetaModel.AxTable table = tableEnum.Current;

            // The metadata objects are keyed by name; the numeric IDs still come from the Dictionary classes.
            tableId = dict.tableName2Id(table.Name);
            tableMetadata.Id = tableId;
            tableMetadata.Name = table.Name;
            tableMetadata.Label = table.Label;
            tableMetadata.HasCompanySpecificData = table.SaveDataPerCompany;

            dt = dict.tableObject(tableId);
            var fieldEnum = table.Fields.GetEnumerator();

            while (fieldEnum.MoveNext())
            {
                FpTestsNET.Models.FieldMetadata fieldMetadata = new FpTestsNET.Models.FieldMetadata();
                Microsoft.Dynamics.AX.Metadata.MetaModel.AxTableField field = fieldEnum.Current;

                int fieldId = dt.fieldName2Id(field.Name);
                df = dt.fieldObject(fieldId);

                fieldMetadata.FieldId = fieldId;
                fieldMetadata.TableId = tableId;
                fieldMetadata.Name = field.Name;
                fieldMetadata.Label = field.Label;
                fieldMetadata.Help = field.HelpText;
                fieldMetadata.AssetClassification = field.AssetClassification;
                fieldMetadata.GeneralDataProtectionRegulation = enum2Str(field.GeneralDataProtectionRegulation);
                fieldMetadataList.Add(fieldMetadata);
            }

            tableMetadata.Fields = fieldMetadataList;
            tableMetadataList.Add(tableMetadata);
        }

        return tableMetadataList;
    }

Third Solution

I then took the code from above and moved it into a .NET project just to see if there was a performance or memory difference there:

    public static ArrayList GetTableMetadata()
    {
        Dictionary dict = new Dictionary();
        DictTable dt;
        DictField df;
        var tableList = new ArrayList();
        var tables = MetadataSupport.GetAllTables();

        foreach (var table in tables)
        {
            // Only regular tables are reported; other table types are skipped.
            if (table.TableType == Microsoft.Dynamics.AX.Metadata.Core.MetaModel.TableType.Regular)
            {
                TableMetadata tm = new TableMetadata();
                int tableId = dict.Tablename2id(table.Name);

                tm.Id = tableId;
                tm.Name = table.Name;
                tm.Label = LabelHelper.GetLabel(table.Label);
                tm.HasCompanySpecificData =
                    table.SaveDataPerCompany == Microsoft.Dynamics.AX.Metadata.Core.MetaModel.NoYes.Yes;

                dt = dict.Tableobject(tableId);

                foreach (var field in table.Fields)
                {
                    int fieldId = dt.Fieldname2id(field.Name);
                    df = (DictField)dt.Fieldobject(fieldId);

                    FieldMetadata fm = new FieldMetadata();
                    fm.Id = tableId + "|" + fieldId;
                    fm.FieldId = fieldId;
                    fm.TableId = tableId;
                    fm.Name = field.Name;
                    // Labels are resolved to display text here instead of returning the raw label reference.
                    fm.Label = LabelHelper.GetLabel(df.label());
                    fm.Help = LabelHelper.GetLabel(field.HelpText);
                    fm.AssetClassification = field.AssetClassification;
                    fm.GeneralDataProtectionRegulation = Enum.GetName(typeof(Microsoft.Dynamics.AX.Metadata.Core.MetaModel.GeneralDataProtectionRegulation), field.GeneralDataProtectionRegulation);
                    tm.Fields.Add(fm);
                }

                tableList.Add(tm);
            }
        }

        return tableList;
    }

Does it Help Performance and Memory Usage?

The second and third solutions above are easier to read, but does using these libraries actually help with performance and memory usage?

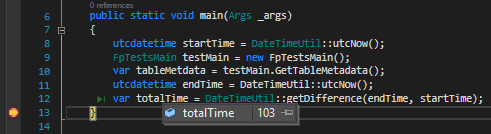

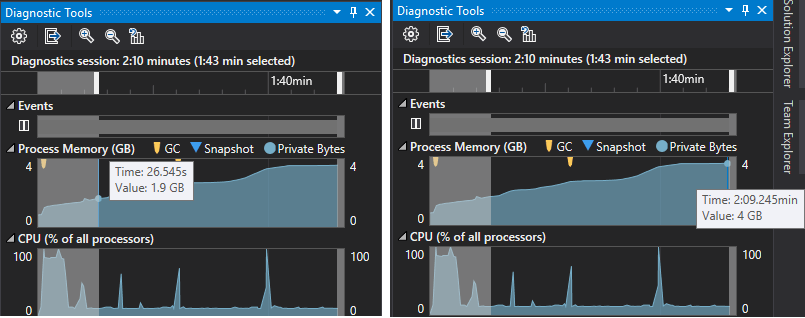

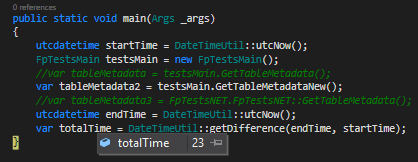

I set up a little test application to try this out. My original solution took 1 minute 43 seconds to complete and used over 2 GB of RAM.

When I moved my solution over to the MetadataSupport libraries, the time dropped to only 23 seconds and memory usage dropped to 1.5 GB.

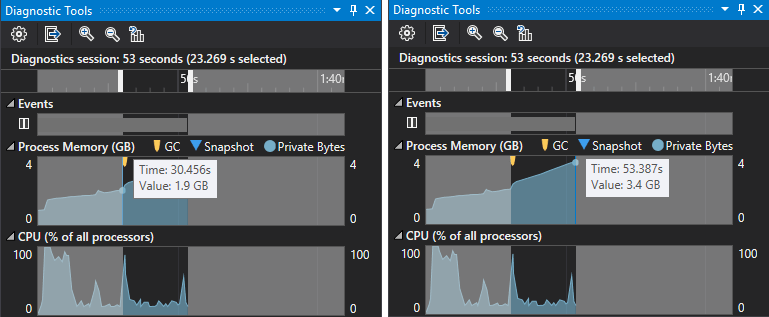

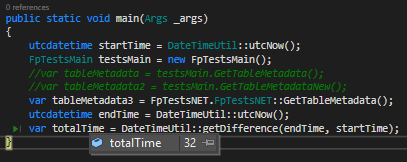

For the final test, I took the same code from the second scenario above and ran it in a .NET solution to compare .NET with X++. It took 32 seconds, but it used just over 1 GB of memory.
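
The post does not show how the timings and memory figures were captured. As a rough illustration, here is one way such numbers could be collected from inside a .NET test application, using only standard .NET APIs (`Stopwatch`, `GC.GetTotalMemory`, `Process.PeakWorkingSet64`). The names `RunMeasurement` and `Measurement<T>` are hypothetical and not part of the original test code. Note that the peak working set covers the whole process since it started, so it includes unmanaged memory and anything allocated before the measured call.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper types for illustration; none of these names come from the original post.
public sealed class Measurement<T>
{
    public Measurement(T value, TimeSpan elapsed, long retainedManagedBytes, long peakWorkingSetBytes)
    {
        Value = value;
        Elapsed = elapsed;
        RetainedManagedBytes = retainedManagedBytes;
        PeakWorkingSetBytes = peakWorkingSetBytes;
    }

    public T Value { get; }
    public TimeSpan Elapsed { get; }
    public long RetainedManagedBytes { get; }
    public long PeakWorkingSetBytes { get; }
}

public static class RunMeasurement
{
    public static Measurement<T> Measure<T>(Func<T> work)
    {
        if (work == null) throw new ArgumentNullException(nameof(work));

        // Settle the managed heap so earlier garbage does not distort the baseline.
        GC.Collect();
        GC.WaitForPendingFinalizers();
        GC.Collect();
        long before = GC.GetTotalMemory(false);

        Stopwatch timer = Stopwatch.StartNew();
        T value = work();
        timer.Stop();

        // Force a collection so only memory that is still reachable (mainly the result) is counted.
        long after = GC.GetTotalMemory(true);

        long peakWorkingSet;
        using (Process current = Process.GetCurrentProcess())
        {
            // Process-wide peak since start, including unmanaged memory.
            peakWorkingSet = current.PeakWorkingSet64;
        }

        // 'value' is handed to the caller, so it stays reachable while 'after' is sampled.
        return new Measurement<T>(value, timer.Elapsed, after - before, peakWorkingSet);
    }
}
```

Under these assumptions, a call such as `RunMeasurement.Measure(() => GetTableMetadata())` would return the metadata list together with the elapsed time, the managed memory still held after the call, and the process peak working set. Running each variant in a fresh process keeps the peak figures comparable.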

Conclusion

There are a couple takeaways that I had from going through these tests:

- When the platform you are developing on changes as fast as D365FO does, always be on the lookout for more efficient ways to do things. Keeping things the same may have worked in the AX 2012 world but not anymore.

- A performance increase when using the MetadataSupport libraries was expected, but the size of the increase was very unexpected for me (23 secs vs 1 min 43 secs -> 78% improvement).

- But the bigger takeaway for me was the memory usage difference between X++ and .NET when utilizing the MetadataSupport libraries (2 GB for X++ vs 1.1 GB for .NET -> 45% improvement). Since X++ in D365FO gets compiled down to .NET, I would have expected almost no difference in performance or memory usage. I have seen this memory usage difference in other tasks as well when dealing with large collections of objects, and it is one of the reasons that I always try to do large data operations in .NET, but I have never been able to understand why it happens. It would be great to get some feedback from some #XppGroupies who could give a deep dive into this.
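
The percentages in the list above can be reproduced from the measurements reported earlier, with 1 min 43 s written as 103 s. The 2 GB baseline matches the figure given for the original X++ solution. The last line uses the 1.5 GB reported for the X++ MetadataSupport run as the baseline instead; that is an alternative reading worked out here, not a number stated in the post.

```latex
\begin{aligned}
\text{time, original X++ vs. MetadataSupport X++:}\quad & \frac{103 - 23}{103} \approx 0.78 \\
\text{memory, 2 GB vs. 1.1 GB:}\quad & \frac{2.0 - 1.1}{2.0} = 0.45 \\
\text{memory, 1.5 GB vs. 1.1 GB:}\quad & \frac{1.5 - 1.1}{1.5} \approx 0.27
\end{aligned}
```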

Also, just to note, I do know that there is a Memory Usage tab in Visual Studio Diagnostic Tools that would give me more insight into how the memory is being used. Unfortunately, any time I try to use it, Visual Studio crashes, so I had to come up with a different approach for measuring memory usage. Other things that make my Visual Studio crash when doing X++ programming include:

- Right clicking anywhere in code

- Trying to put two coding windows side by side

- Trying to get form designer to refresh or sometimes just going to the design tab of a form

- Trying to do a rebuild of a solution too quickly after it failed

- The list can go on… but I digress

Update (3/11/2021)

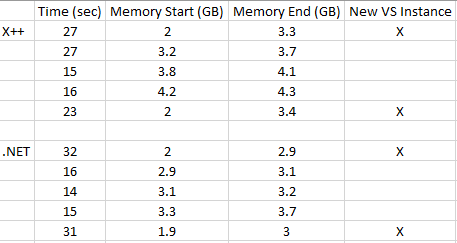

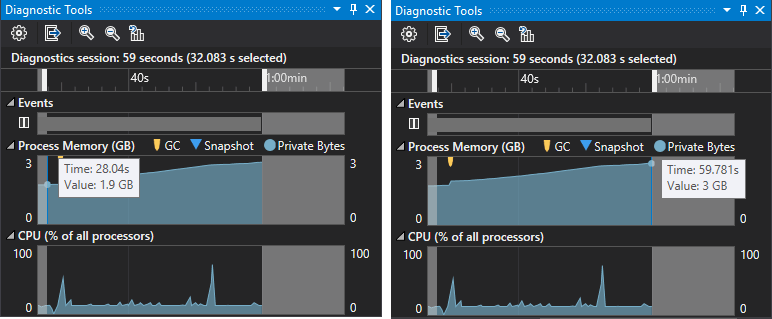

After some discussions on this from a LinkedIn post I actually have to revise some of my performance testing I did above. Initially during my testing to try and limit the variance between tests, I would always use a new instance of Visual Studio. I found that if I ran multiple tests in one Visual Studio instance that the performance and memory usage between the X++ solution and .NET solution using the MetadataSupport library solution was much closer and in line with what I would expect. Here is the additional testing data I generated: